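Project overview

The script downloads doc, ppt, and pdf documents. For doc documents the text is downloaded, but tables inside a doc are not; documents that consist of images (such as scans) can also be downloaded. For ppt and pdf, the page images are downloaded first and then placed into a ppt. Anything that can be previewed can be downloaded.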

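Features

- Downloads any previewable Word document as a Word document. Scanned documents are supported as well.

- Downloads any previewable ppt or pdf as a non-editable ppt. The web page only contains images, so in principle an editable version cannot be recovered.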

Environment setup

    pip install requests
    pip install my_fake_useragent
    pip install opencv-python
    pip install python-pptx
    pip install python-docx
    pip install selenium
    pip install scrapy

This project uses chromedriver to drive the Chrome browser for scraping, so the chromedriver version must match your Chrome version.

Windows users, read this

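1. If your Chrome version happens to be 87.0.4280, congratulations: you can go straight to Usage, because the chromedriver I downloaded is that version too.

2. If not, check your Chrome version and download the matching chromedriver from http://npm.taobao.org/mirrors/chromedriver/. For example, if your browser version is 87.0.4280, open the 87.0.4280.20/ link, and on Windows download chromedriver_win32.zip and extract it. Do not download the LATEST_RELEASE_87.0.4280 link. It is of no use here, and some people have already lost time on it.

3. Replace the existing file with the extracted chromedriver.exe, then skip to Usage.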
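The matching rule in step 2 can be sketched in a few lines. This is only an illustration: the helper name `pick_driver_dirs` and the sample listing are hypothetical, and comparing the first three dotted components of the version is an assumption drawn from the 87.0.4280 example above.

```python
def pick_driver_dirs(chrome_version, listing):
    """Return the mirror folder names whose build prefix matches the browser."""
    # Assumption: only the first three dotted components need to match,
    # e.g. "87.0.4280" taken from "87.0.4280.141".
    prefix = ".".join(chrome_version.split(".")[:3])
    matches = []
    for name in listing:
        # LATEST_RELEASE_* entries are not driver folders, so skip them.
        if name.startswith("LATEST_RELEASE"):
            continue
        if name.rstrip("/").startswith(prefix + "."):
            matches.append(name)
    return matches


# Hypothetical excerpt of the mirror's directory listing.
listing = [
    "86.0.4240.22/",
    "87.0.4280.20/",
    "87.0.4280.88/",
    "LATEST_RELEASE_87.0.4280",
]
print(pick_driver_dirs("87.0.4280.141", listing))
```

Any of the returned folders should contain a chromedriver_win32.zip for that build.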

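Ubuntu users, read this

Honestly, if you are already on Ubuntu I will assume you know what you are doing: download the chromedriver (Linux build) that matches your Chrome version, change the chromedriver path in the script to wherever you extracted it, then skip to Usage. Yes, I am being lazy here.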

Usage:

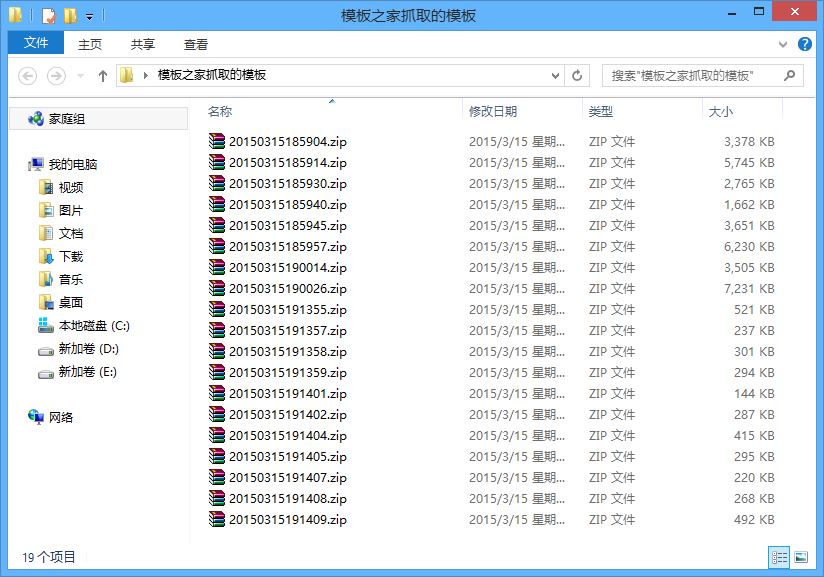

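Change `url` in the code to the link you want to download. The script detects the document type automatically, creates a folder (`./doc` or `./ppt`) under the current directory, and saves the downloaded file there.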
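The type detection can be pictured roughly as follows. This is a simplified sketch rather than the script's actual code: it uses the standard-library `HTMLParser` instead of Selenium and Scrapy, it does not restrict the search to the content container the way the script's XPath does, and the names `PreviewImageFinder` and `guess_output_kind` are hypothetical.

```python
from html.parser import HTMLParser


class PreviewImageFinder(HTMLParser):
    """Collect lazy-loaded image URLs from <img> tags."""

    def __init__(self):
        super().__init__()
        self.image_urls = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr_map = dict(attrs)
        # The script checks the data-loading-src and data-src attributes.
        url = attr_map.get("data-loading-src") or attr_map.get("data-src")
        if url:
            self.image_urls.append(url)


def guess_output_kind(html):
    """Return "ppt" when the page carries preview images, otherwise "doc"."""
    finder = PreviewImageFinder()
    finder.feed(html)
    return "ppt" if finder.image_urls else "doc"


print(guess_output_kind('<div><p><img data-src="//example.invalid/1.jpg"></p></div>'))
print(guess_output_kind("<div><p>plain text</p></div>"))
```

Pages whose preview is made of lazy-loaded images are treated as ppt or pdf; everything else goes down the doc path.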

Main code

    import os
    import time

    import cv2
    import docx
    import requests
    from docx.shared import Inches as DocxInches
    from my_fake_useragent import UserAgent
    from pptx import Presentation
    from pptx.util import Inches
    from scrapy import Selector
    from selenium import webdriver
    from selenium.webdriver.common.desired_capabilities import DesiredCapabilities

    # chromedriver for Windows
    chromedriver_path = "./chromedriver.exe"
    # chromedriver for Ubuntu
    # chromedriver_path = "./chromedriver"

    doc_dir_path = "./doc"
    ppt_dir_path = "./ppt"

    # Sample links for the different page types
    # url = "https://wenku.baidu.com/view/4410199cb0717fd5370cdc2e.html?fr=search"  # doc_txt p
    # url = "https://wenku.baidu.com/view/4d18916f7c21af45b307e87101f69e314332fa36.html"  # doc_txt span
    # url = "https://wenku.baidu.com/view/dea519c7e53a580216fcfefa.html?fr=search"  # doc_txt span br
    # url = 'https://wk.baidu.com/view/062edabeb6360b4c2e3f5727a5e9856a5712262d?pcf=2&bfetype=new'  # doc_img
    # url = "https://wenku.baidu.com/view/2af6de34a7e9856a561252d380eb6294dd88228d"  # VIP-only doc
    # url = "https://wenku.baidu.com/view/3de365cc6aec0975f46527d3240c844769eaa0aa.html?fr=search"  # ppt
    # url = "https://wenku.baidu.com/view/18a8bc08094e767f5acfa1c7aa00b52acec79c55"  # pdf
    # url = "https://wenku.baidu.com/view/bbe27bf21b5f312b3169a45177232f60dccce772"
    # url = "https://wenku.baidu.com/view/5cb11d096e1aff00bed5b9f3f90f76c660374c24.html?fr=search"
    # url = "https://wenku.baidu.com/view/71f9818fef06eff9aef8941ea76e58fafab045a6.html"
    # url = "https://wenku.baidu.com/view/ffc6b32a68eae009581b6bd97f1922791788be69.html"
    url = "https://wenku.baidu.com/view/d4d2e1e3122de2bd960590c69ec3d5bbfd0adaa6.html"


    class DownloadImg():
        def __init__(self):
            self.ua = UserAgent()

        def download_one_img(self, img_url, saved_path):
            # Download one image with a random User-Agent
            header = {
                "User-Agent": "{}".format(self.ua.random().strip()),
                'Connection': 'close'}
            r = requests.get(img_url, headers=header, stream=True)
            print("Image request status code {}".format(r.status_code))
            if r.status_code == 200:
                # Write the image to disk
                with open(saved_path, mode="wb") as f:
                    f.write(r.content)
                print("download {} success!".format(saved_path))
            del r
            return saved_path


    class StartChrome():
        def __init__(self):
            # Emulate a mobile device so the mobile version of the page is served
            mobile_emulation = {"deviceName": "Galaxy S5"}
            capabilities = DesiredCapabilities.CHROME
            capabilities['loggingPrefs'] = {'browser': 'ALL'}
            options = webdriver.ChromeOptions()
            options.add_experimental_option("mobileEmulation", mobile_emulation)
            # Note: executable_path, desired_capabilities, chrome_options and
            # find_elements_by_xpath are Selenium 3 style calls.
            self.brower = webdriver.Chrome(executable_path=chromedriver_path,
                                           desired_capabilities=capabilities,
                                           chrome_options=options)
            # Start the browser and open the page to download
            self.brower.get(url)
            self.download_img = DownloadImg()

        def click_ele(self, click_xpath):
            # Click the first element matching the XPath, if there is one
            click_ele = self.brower.find_elements_by_xpath(click_xpath)
            if click_ele:
                click_ele[0].location_once_scrolled_into_view  # scroll to the element
                # Click through JavaScript so the click works even if the element is covered
                self.brower.execute_script('arguments[0].click()', click_ele[0])

        def judge_doc(self, contents):
            # Decide whether the doc holds text or images by comparing the text
            # found directly in <p> with the text found in <span>
            p_list = ''.join(contents.xpath("./text()").extract())
            span_list = ''.join(contents.xpath("./span/text()").extract())
            if len(span_list) != len(p_list):
                xpath_content_one = "./br/text()|./span/text()|./text()"
            else:
                xpath_content_one = "./span/img/@src"
            return xpath_content_one

        def create_ppt_doc(self, ppt_dir_path, doc_dir_path):
            # Close the "become a member" dialog
            xpath_close_button = "//div[@class='na-dialog-wrap show']/div/div/div[@class='btn-close']"
            self.click_ele(xpath_close_button)
            # Click "continue reading"
            xpath_continue_read_button = "//div[@class='foldpagewg-icon']"
            self.click_ele(xpath_continue_read_button)
            # Dismiss the "open in the Baidu app" prompt
            xpath_next_content_button = "//div[@class='btn-wrap']/div[@class='btn-cancel']"
            self.click_ele(xpath_next_content_button)
            # Keep clicking "load more" until the full document is shown
            click_count = 0
            while True:
                # Stop once the last page has been reached
                if self.brower.find_elements_by_xpath("//div[@class='pagerwg-loadSucc hide']") or \
                        self.brower.find_elements_by_xpath("//div[@class='pagerwg-button' and @style='display: none;']"):
                    break
                # Click "load more"
                xpath_loading_more_button = "//span[@class='pagerwg-arrow-lower']"
                self.click_ele(xpath_loading_more_button)
                click_count += 1
                print("Clicked 'load more' {} time(s)!".format(click_count))
                # Wait 1.5 seconds for the browser to load
                time.sleep(1.5)
            # Grab the rendered HTML
            sel = Selector(text=self.brower.page_source)
            # Decide the document type
            xpath_content = "//div[@class='content singlePage wk-container']/div/p/img/@data-loading-src|//div[@class='content singlePage wk-container']/div/p/img/@data-src"
            contents = sel.xpath(xpath_content).extract()
            if contents:  # ppt (or pdf)
                self.create_ppt(ppt_dir_path, sel)
            else:  # doc
                self.create_doc(doc_dir_path, sel)

        def create_ppt(self, ppt_dir_path, sel):
            # Create the output folder if it does not exist
            if not os.path.exists(ppt_dir_path):
                os.makedirs(ppt_dir_path)
            SLD_LAYOUT_TITLE_AND_CONTENT = 6  # 6 is the blank slide layout
            prs = Presentation()  # create the presentation
            # Get the title
            xpath_title = "//div[@class='doc-title']/text()"
            title = "".join(sel.xpath(xpath_title).extract()).strip()
            # Get the content: every <img> may carry several candidate URLs
            xpath_content_p = "//div[@class='content singlePage wk-container']/div/p/img"
            xpath_content_p_list = sel.xpath(xpath_content_p)
            xpath_content_p_url_list = []
            for imgs in xpath_content_p_list:
                xpath_content = "./@data-loading-src|./@data-src|./@src"
                contents_list = imgs.xpath(xpath_content).extract()
                xpath_content_p_url_list.append(contents_list)
            # Paths of the downloaded images, used to build the ppt and clean up later
            img_path_list = []
            # Download the images into the output folder
            for index, content_img_p in enumerate(xpath_content_p_url_list):
                p_img_path_list = []
                for index_1, img_one in enumerate(content_img_p):
                    one_img_saved_path = os.path.join(ppt_dir_path, "{}_{}.jpg".format(index, index_1))
                    self.download_img.download_one_img(img_one, one_img_saved_path)
                    p_img_path_list.append(one_img_saved_path)
                if not p_img_path_list:
                    continue
                # Keep only the tallest candidate of each <img>
                p_img_max_shape = 0
                index_max_img = 0
                for candidate_index, p_img_path in enumerate(p_img_path_list):
                    img_shape = cv2.imread(p_img_path).shape
                    if p_img_max_shape < img_shape[0]:
                        p_img_max_shape = img_shape[0]
                        index_max_img = candidate_index
                img_path_list.append(p_img_path_list[index_max_img])
            print(img_path_list)
            # Find the size of the tallest downloaded image
            img_shape_max = [0, 0]
            for img_path_one in img_path_list:
                img_path_one_shape = cv2.imread(img_path_one).shape
                if img_path_one_shape[0] > img_shape_max[0]:
                    img_shape_max = img_path_one_shape
            # Resize every image to that size
            for img_path_one in img_path_list:
                cv2.imwrite(img_path_one,
                            cv2.resize(cv2.imread(img_path_one), (img_shape_max[1], img_shape_max[0])))
            # Convert pixels to EMU, the length unit used in ppt, assuming 72 dpi:
            # 1 cm = 28.346 px = 360000 EMU, so 1 px = 12700 EMU
            prs.slide_width = img_shape_max[1] * 12700
            prs.slide_height = img_shape_max[0] * 12700
            for img_path_one in img_path_list:
                left = Inches(0)
                top = Inches(0)
                slide_layout = prs.slide_layouts[SLD_LAYOUT_TITLE_AND_CONTENT]
                slide = prs.slides.add_slide(slide_layout)
                pic = slide.shapes.add_picture(img_path_one, left, top)
                print("insert {} into pptx success!".format(img_path_one))
            # Delete every downloaded .jpg once the slides are built
            for root, dirs, files in os.walk(ppt_dir_path):
                for file in files:
                    if file.endswith(".jpg"):
                        img_path = os.path.join(root, file)
                        os.remove(img_path)
            prs.save(os.path.join(ppt_dir_path, title + ".pptx"))
            print("download {} success!".format(os.path.join(ppt_dir_path, title + ".pptx")))

        def create_doc(self, doc_dir_path, sel):
            # Create the output folder if it does not exist
            if not os.path.exists(doc_dir_path):
                os.makedirs(doc_dir_path)
            # Get the title
            xpath_title = "//div[@class='doc-title']/text()"
            title = "".join(sel.xpath(xpath_title).extract()).strip()
            document = docx.Document()  # create the Word document
            document.add_heading(title, 0)  # add the title
            # Get the article content
            xpath_content = "//div[contains(@data-id,'div_class_')]//p"
            contents = sel.xpath(xpath_content)
            # Decide the content type
            xpath_content_one = self.judge_doc(contents)
            if xpath_content_one.endswith("text()"):  # text: scrape it directly
                for content_one in contents:
                    one_p_list = content_one.xpath(xpath_content_one).extract()
                    p_txt = ""
                    for p in one_p_list:
                        if p == " ":
                            p_txt += ('\n' + p)
                        else:
                            p_txt += p
                    pp = document.add_paragraph(p_txt)
                document.save(os.path.join(doc_dir_path, '{}.docx'.format(title)))
                print("download {} success!".format(title))
            elif xpath_content_one.endswith("@src"):  # images: download them
                for index, content_one in enumerate(contents.xpath(xpath_content_one).extract()):
                    # Build the image download URL
                    content_img_one_url = 'https:' + content_one
                    # Save the image
                    saved_image_path = self.download_img.download_one_img(
                        content_img_one_url,
                        os.path.join(doc_dir_path, "{}.jpg".format(index)))
                    document.add_picture(saved_image_path, width=DocxInches(6))  # add the image to the document
                    os.remove(saved_image_path)  # delete the downloaded image
                document.save(os.path.join(doc_dir_path, '{}.docx'.format(title)))  # save the document
                print("download {} success!".format(title))


    if __name__ == "__main__":
        start_chrome = StartChrome()
        start_chrome.create_ppt_doc(ppt_dir_path, doc_dir_path)
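The factor 12700 used for the slide size comes from a unit conversion: one inch is 914400 EMU, so at 72 pixels per inch one pixel is 914400 / 72 = 12700 EMU. A minimal sketch of that arithmetic, assuming 72 dpi as the script does (the helper names `pixels_to_emu` and `slide_size_for_image` are hypothetical):

```python
EMU_PER_INCH = 914400  # English Metric Units per inch
ASSUMED_DPI = 72       # assumption: images are treated as 72 pixels per inch


def pixels_to_emu(pixels, dpi=ASSUMED_DPI):
    """Convert a pixel length to EMU at the given resolution."""
    return pixels * EMU_PER_INCH // dpi


def slide_size_for_image(height_px, width_px):
    """Return (slide_width, slide_height) in EMU for an image shape in pixels."""
    return pixels_to_emu(width_px), pixels_to_emu(height_px)


print(pixels_to_emu(1))                 # 12700
print(slide_size_for_image(720, 1280))  # (16256000, 9144000)
```

The argument order mirrors the OpenCV image shape, which lists height before width.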

Project repository

https://github.com/siyangbing/baiduwenku


Original link: https://github.com/siyangbing/baiduwenku